ARTag

An ARTag is a type of AR (Augmented Reality) tag that can be used to mark object positions in a three-dimensional scene or to assist robots with localization and navigation. By placing QR code markers in the environment, robots can scan these markers to obtain information about their location and use this information to plan paths and execute tasks.

ar_track_alvar Package

- The functionality for AR tag recognition is implemented through the open-source

ar_track_alvarpackage. For more details, refer to the official documentation. This package enables the detection and localization of single or multiple ARTags. - Package Path

~/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar

Node Startup

The ar_track_alvar node requires numerous parameters such as the size of the ARTag, the topic of the camera image, the topic of the camera intrinsic parameters, and tracking error, so it is typically run using a launch file.

To start the recognition nodes for the chin camera and the head RGBD camera, use the following commands respectively:

Head Camera

source ~/robot_ros_application/catkin_ws/devel/setup.bash

roslaunch ar_track_alvar roban_head_camera.launchChin Camera

source ~/robot_ros_application/catkin_ws/devel/setup.bash

roslaunch ar_track_alvar roban_chin_camera.launch

Launch File Paths

Head Camera

- /home/lemon/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar/ar_track_alvar/launch/roban_head_camera.launch

Chin Camera

- /home/lemon/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar/ar_track_alvar/launch/roban_chin_camera.launch

Single Tag Detection Launch File Configuration

When only single tag detection is required, a common configuration file is as follows (using the chin camera's launch file as an example):

```xml

<launch>

<group ns="chin">

<arg name="marker_size" default="4.4" />

<arg name="max_new_marker_error" default="0.08" />

<arg name="max_track_error" default="0.2" />

<arg name="cam_image_topic" default="/chin_camera/image" />

<arg name="cam_info_topic" default="/chin_camera/camera_info" />

<arg name="output_frame" default="/chin_camera" />

<node name="ar_track_alvar" pkg="ar_track_alvar" type="individualMarkersNoKinect" respawn="false" output="screen">

<param name="marker_size" type="double" value="$(arg marker_size)" />

<param name="max_new_marker_error" type="double" value="$(arg max_new_marker_error)" />

<param name="max_track_error" type="double" value="$(arg max_track_error)" />

<param name="output_frame" type="string" value="$(arg output_frame)" />

<remap from="camera_image" to="$(arg cam_image_topic)" />

<remap from="camera_info" to="$(arg cam_info_topic)" />

<remap from="visualization_marker" to="visualization_marker_chin" />

<!-- Remap the original result output topic name visualization_marker to visualization_marker_chin. The result is published under the chin group as /chin/visualization_marker_chin -->

</node>

</group>

</launch>

```

- marker_size -- The width of the ARTag code (in centimeters)

- max_new_marker_error -- The error threshold for detecting new tags

- max_track_error -- The tracking error threshold

- camera_image -- The topic of the input image, which can be monochrome or color, but should be unrectified as rectification is performed within this package

- camera_info -- The name of the topic that provides camera calibration parameters to rectify the image

- output_frame -- The name of the tf coordinate system to which the AR tag coordinates will be relative

Multiple Tags Combined Detection

ar_track_alvarallows multiple ARTag codes to be affixed to the same object and treated as a single entity during recognition. To "bundle" multiple tags, abundle_filesparameter must be passed in the launch file. The value of this parameter should be an XML format file that defines the coordinates of the four corners of each tag in the bundle. An example is as follows:<?xml version="1.0" encoding="utf-8"?>

<multimarker markers="11">

<marker status="1" index="10">

<corner z="0" x="-62.5" y="-32.5"></corner>

<corner z="0" x="-57.5" y="-32.5"></corner>

<corner z="0" x="-57.5" y="-27.5"></corner>

<corner z="0" x="-62.5" y="-27.5"></corner>

</marker>

<marker status="1" index="1">

<corner z="0" x="27.5" y="-2.5"></corner>

<corner z="0" x="32.5" y="-2.5"></corner>

<corner z="0" x="32.5" y="2.5"></corner>

<corner z="0" x="27.5" y="2.5"></corner>

</marker>

.

.

.

<marker status="1" index="8">

<corner z="0" x="-2.5" y="27.5"></corner>

<corner z="0" x="2.5" y="27.5"></corner>

<corner z="0" x="2.5" y="32.5"></corner>

<corner z="0" x="-2.5" y="32.5"></corner>

</marker>

</multimarker>- In this example, the

<multimarker markers="11">tag defines that the bundle contains a total of 11 ARTag codes. - Under the

multimarkertag, themarkertag lists the x, y, z coordinates of the four corner points of each ARTag code, with theindexattribute filled with the id value of the individual ARTag code.

- In this example, the

After creating the

bundle_files, add abundle_filesparameter in the single tag detection launch file, specifying the path to the definedbundle_filesfile. Running this will enable joint localization of multiple tags.An example of multi-tag detection using the chin camera is located at:

- Launch File Path:

/home/lemon/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar/ar_track_alvar/launch/roban_chin_camera_multi.launch - Bundle Files Path:

/home/lemon/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar/ar_track_alvar/launch/MultiMarkerMap.xml - The positions of the tags in the

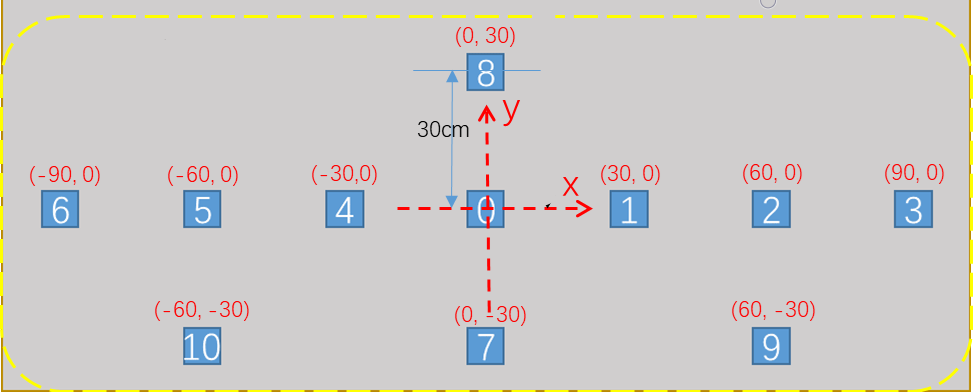

bundle_filesfile are shown in the figure below::

- To execute multi-tag recognition, run the following commands:

source ~/robot_ros_application/catkin_ws/devel/setup.bash

roslaunch ar_track_alvar roban_chin_camera_multi.launch

- Launch File Path:

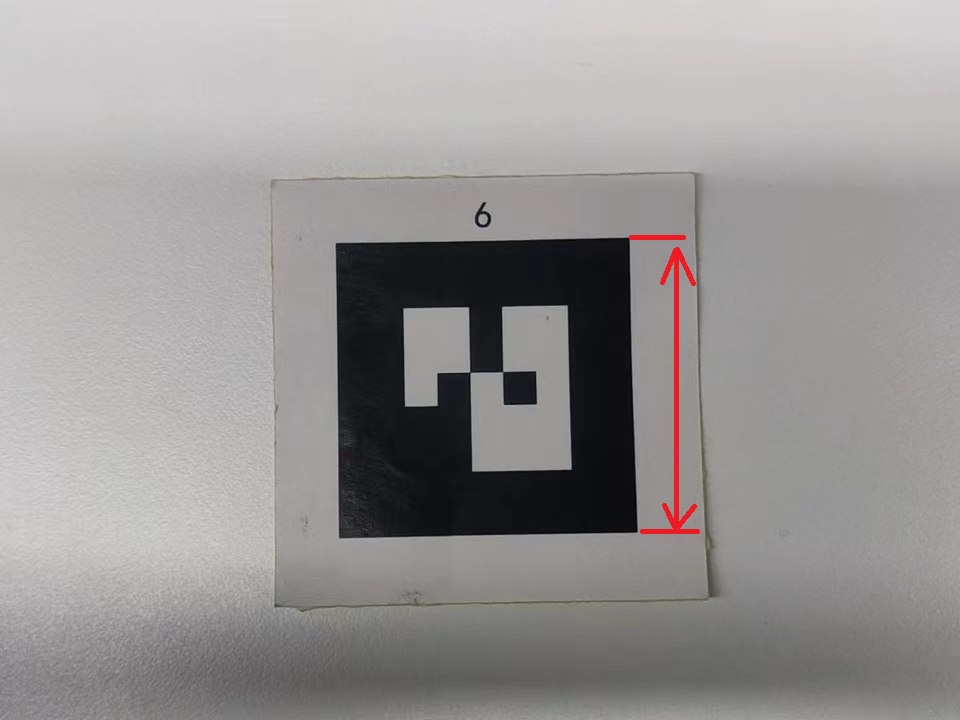

Calibrating ARTag Size

The size of the ARTag should be the actual side length of the printed ARTag (square).

Tips:You can edit the width and height of the image in Word and then print it at "Actual Size" or "100% Size" to obtain a code of the specified size.

Modify the

marker_sizeparameter in the corresponding launch file configuration to the actual size of the printed ARTag.

Node Information

Node Names

Head Camera

- /head/ar_track_alvar

Chin Camera

- /chin/ar_track_alvar

Package Path

- ~/robot_ros_application/catkin_ws/src/ar_tag_detect/ar_track_alvar

Message Names

Head Camera

- /head/visualization_marker_head

Chin Camera

- /chin/visualization_marker_chin (Results for both single and multi-tag detection are published on the same topic)

Message Type

- visualization_msgs/Marker

Message Type Definition

uint8 ARROW=0

uint8 CUBE=1

uint8 SPHERE=2

uint8 CYLINDER=3

uint8 LINE_STRIP=4

uint8 LINE_LIST=5

uint8 CUBE_LIST=6

uint8 SPHERE_LIST=7

uint8 POINTS=8

uint8 TEXT_VIEW_FACING=9

uint8 MESH_RESOURCE=10

uint8 TRIANGLE_LIST=11

uint8 ADD=0

uint8 MODIFY=0

uint8 DELETE=2

uint8 DELETEALL=3

std_msgs/Header header

uint32 seq

time stamp

string frame_id

string ns

int32 id

int32 type

int32 action

geometry_msgs/Pose pose

geometry_msgs/Point position

float64 x

float64 y

float64 z

geometry_msgs/Quaternion orientation

float64 x

float64 y

float64 z

float64 w

geometry_msgs/Vector3 scale

float64 x

float64 y

float64 z

std_msgs/ColorRGBA color

float32 r

float32 g

float32 b

float32 a

duration lifetime

bool frame_locked

geometry_msgs/Point[] points

float64 x

float64 y

float64 z

std_msgs/ColorRGBA[] colors

float32 r

float32 g

float32 b

float32 a

string text

string mesh_resource

bool mesh_use_embedded_materials

Result Output and Parsing

After running the launch file for chin camera detection, we can use the command

rostopic echo /chin/visualization_marker_chinin the terminal to view the ARTag recognition results in real-time. Here is an example of a data entry for parsing:header:

seq: 809

stamp:

secs: 1671697367

nsecs: 476323108

frame_id: "chin_camera" # Coordinate system of the output result

ns: "basic_shapes" # Result type: basic_shapes for single ARTag result, main_shapes for multiple ARTag code joint detection result

id: 6 # ARTag code number

type: 1

action: 0

pose:

position:

x: -0.00860899694651 # QR code perpendicular to the camera, positive direction to the right

y: -0.0696511716506 # QR code perpendicular to the camera, positive direction upwards

z: 0.271939015273 # QR code perpendicular to the camera, positive direction forward

orientation: # Quaternion

x: 0.986290075697

y: 0.026093318553

z: -0.0374334556082

w: 0.158586763981

scale:

x: 0.044

y: 0.044

z: 0.0088

color:

r: 0.5

g: 0.0

b: 0.5

a: 1.0

lifetime:

secs: 1

nsecs: 0

frame_locked: False

points: []

colors: []

text: ''

mesh_resource: ''

mesh_use_embedded_materials: FalseWhen performing multi-tag detection, the results of single tag detection are also output simultaneously. It is necessary to distinguish and process based on the

nsattribute of the obtained message.

Subscribing to Messages (Python Implementation)

```python

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

from visualization_msgs.msg import Marker

import math

import numpy as np

import scipy.linalg as linalg

ARTAG_CHIN_TOPIC = "/chin/visualization_marker_chin" # Subscribe to the chin camera

class ArtagData():

def __init__(self):

self.topic_name = ARTAG_CHIN_TOPIC

rospy.Subscriber("/chin/visualization_marker_chin", Marker, self.chin_marker_callback)

def quart_to_rpy(self, w, x, y, z): # Convert quaternion to Euler angles

r = math.atan2(2*(w*x+y*z), 1-2*(x*x+y*y))

p = math.asin(2*(w*y-z*x))

y = math.atan2(2*(w*z+x*y), 1-2*(z*z+y*y))

return [r, p, y]

def rotate_mat(self, axis, radian): # Correct image rotation for more accurate x, y, z values

rot_matrix = linalg.expm(np.cross(np.eye(3), axis / linalg.norm(axis) * radian))

return rot_matrix

def chin_marker_callback(self,msg):

rpy = self.quart_to_rpy(msg.pose.orientation.w, msg.pose.orientation.x, msg.pose.orientation.y, msg.pose.orientation.z)

cam_rot = self.rotate_mat([0, 1, 0], 10 * math.pi / 180.0) # Rotate around the robot's y-axis

pos_in_torso = np.dot(cam_rot, np.array([[-msg.pose.position.y], [-msg.pose.position.x], [msg.pose.position.z]])).tolist()

rospy.loginfo("\nresult type: {}\ny: {} x: {} z: {}\nroll: {} pitch: {} yaw: {}\n======".format(msg.ns if msg.ns == "basic_shapes" else "\033[92m"+msg.ns + "\033[00m" , pos_in_torso[0][0], pos_in_torso[1][0], pos_in_torso[2][0], rpy[0], rpy[1], rpy[2]))

if __name__ == "__main__":

rospy.init_node("artag_data")

ad = ArtagData()

rospy.spin()

```

After running the launch file for chin camera detection and then running the above example program, place the ARTag code in front of the robot's chin camera. You will see the terminal print the current position and orientation angles of the ARTag code relative to the camera.

Using tf2 for Coordinate System Transformation

By default, the pose information returned through "/chin/visualization_marker_chin" or "/head/visualization_marker_head" is in the camera coordinate system, meaning the obtained position is the tag's position relative to the robot. If you need to obtain the robot's position relative to the tag, you can use

tf2_rosto transform from the robot coordinate system to the tag coordinate system.In fact, the

ar_track_alvarpackage has already built the tf tree branch between the camera and the tag for us.- You can run the command

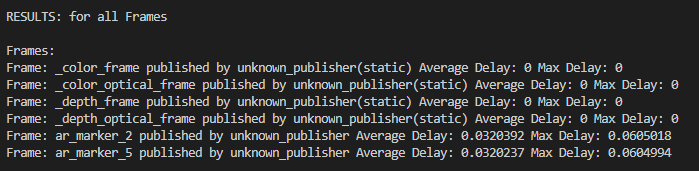

rosrun tf tf_monitorto print out all current tf tree nodes and their relationships.

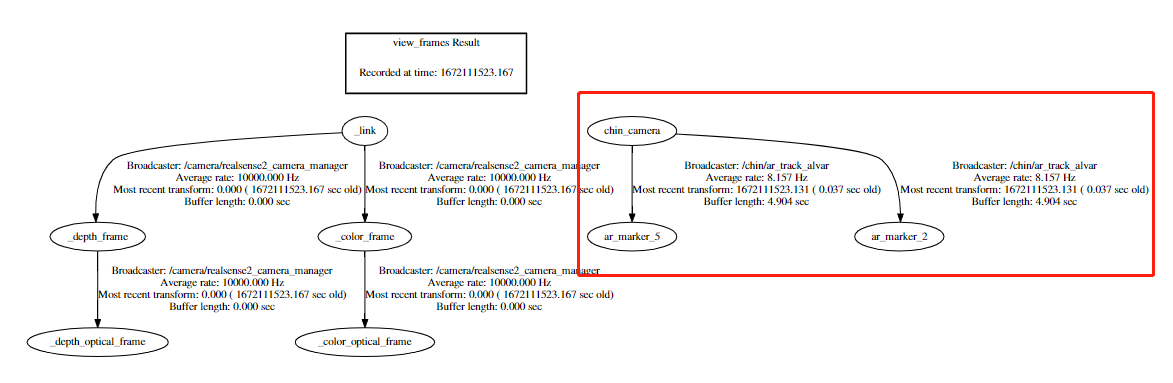

- You can also use the rviz visualization tool or the

rosrun tf view_framescommand to generate a tf tree connection diagram. The right side of the above figure shows the transformation relationship between the current chin_camera and the coordinates of the two ARTag codes (ar_marker_n).

The right side of the above figure shows the transformation relationship between the current chin_camera and the coordinates of the two ARTag codes (ar_marker_n).When using multi-tag detection, the name of the tf coordinate system for the tag set is the name of the first ARTag code defined in the bundle_files (ar_marker_n).

- You can run the command

To obtain the robot's position in the tag coordinate system, here is an example:

#! /usr/bin/env python

# coding=UTF-8

import rospy

import tf2_ros

import tf

from tf2_geometry_msgs import tf2_geometry_msgs

if __name__ == '__main__':

rospy.init_node('tf_listener')

buffer = tf2_ros.Buffer() # Create a buffer object

sub = tf2_ros.TransformListener(buffer) # Create a subscriber object, passing in the buffer

rate = rospy.Rate(10.0)

while not rospy.is_shutdown():

try:

now = rospy.Time.now()

ps_out = buffer.lookup_transform("ar_marker_2", "chin_camera",rospy.Time())#Get the tf transformation relationship

print("{}\n{}".format("==="*10,ps_out))

except (tf.LookupException, tf.ConnectivityException, tf.ExtrapolationException)as e:

continue

rate.sleep()The

lookup_transformfunction can conveniently obtain the transformation relationship between various coordinates, such as obtaining the position of chin_camera relative to ar_marker_2 in the example.